In a statement released this week, San Francisco City Attorney David Chiu announced what he calls a “first-of-its-kind” lawsuit aimed at taking down websites that produce deepfake nude images. Chiu is going after sixteen companies, based in both the U.S. and abroad, claiming that the producers and posters of content on these sites are violating state and federal laws by making and posting deepfake pornography.

Chiu is also looking to sue over violations of California’s Unfair Competition Law and to hold the sites accountable under laws against revenge pornography.

“Generative AI has enormous promise, but as with all new technologies, unintended consequences and criminals are seeking to exploit the new technology. We have to be very clear that this is not innovation — this is sexual abuse,” Chiu says in the above statement.

AI, deepfakes, and bad actors…oh my!

As we mentioned here, you have no doubt heard a variety of terms related to this issue by now: AI, deepfakes, “bad actors,” and more are popping up all over the net. How much AI fakes have affected pornography and ordinary photo sharing is open to debate. Still, the San Francisco City Attorney claims that the images have been used to “extort, bully, threaten, and humiliate women and girls.”

What is certain is that a great many people are viewing and interacting with these images. Chiu claims that the websites he is targeting were visited a whopping 200 million times in just the first half of this year.

A ‘Swift’ progression

The issue of AI-generated fake images undoubtedly flared up when fake nudes of Taylor Swift spread across social media at the beginning of the year. There was also a widely reported case in New Jersey last year, in which high school boys took non-nude photos of some of their female classmates and used an AI generator to create and then post deepfake nude images of those girls.

Although the U.S. Senate passed the Disrupt Explicit Forged Images and Non-Consensual Edits (DEFIANCE) Act, which Chiu no doubt knows about and hopes to build on, the law overall has been slow to crack down on the posting of deepfake nudes, or even to settle on what charges to bring against a website that does so.

What a site reveals might surprise Chiu

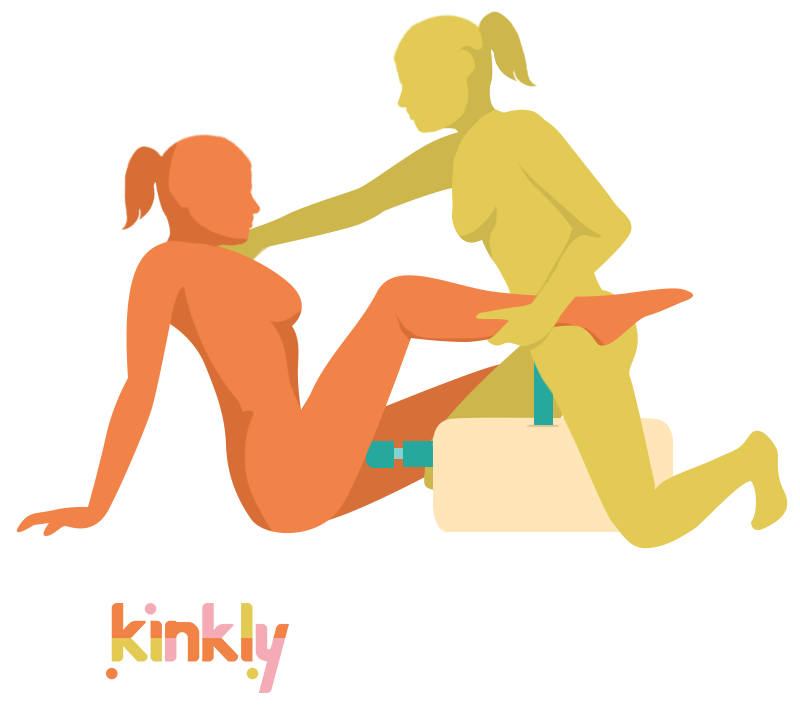

What happens when a website actually advertises the nonconsensual nature of its deepfakes? This is surely a brazen display: the site invites viewers in while stating outright that what they will see are AI-generated images uploaded without the consent of the person depicted. In the case of at least one site Chiu names, users can “undress an uploaded image by use of the AI technology.”

The question then becomes: if an image is technically created by a computer program, and a user is the one who renders it pornographic, is the party presenting the image (the website owner or producer) responsible for a deepfake that wasn't initially pornographic and wasn't technically created by human hands?

As mentioned here, “the continued growth in technology is also likely to cause the expansion of legal issues surrounding AI technology and specifically image generators.” And that growth is unlikely to slow anytime soon, regardless of what laws come down upon it.